|

Abhilash Neog

I am a Ph.D. student at Virginia Tech, where I am supervised by Prof. Anuj Karpatne. Currently, I work as a graduate research assistant at the Knowledge-guided Machine Learning Lab. I received my Bachelor's in Computer Science from BITS Pilani, India. During my undergraduate studies, I worked with Prof. Lavika Goel on soft computing algorithms for enhancing ML techniques. I also worked as a researcher at the ADAPT Lab, advised by Prof. Navneet Goyal, and at CSIR CEERI, advised by Dr. J.L. Raheja. I have also spent some time in industry: Kryptowire (Summer 2023), Oracle (2020-2022), VMware (Spring 2020), and Samsung Research (Summer 2019). |

|

Recent News

|

Research

My research broadly includes Scientific Foundation Models (model architecture, pretraining strategies), Time Series Modeling, applications of multi-modal models to scientific tasks, LLMs for time series, and Physics-guided ML.

Foundation Models. I am currently building a foundation model for an environmental system (LakeFM'25). Some of the challenges I am trying to address are pretraining strategies for large models, learning on irregular and sparse spatio-temporal data, cross-frequency learning, and knowledge transfer from (physics-based) simulation to real-world data.

Time Series Modeling. I am currently involved in the following research directions

Application of Vision-Language Models. Applied Research in the following directions,

Physics-guided ML. I am collaborating on research that uses generative modeling approaches, particularly diffusion models, to solve Partial Differential Equations (PDEs) through physics-guided learning (PRISMA'25). |

|

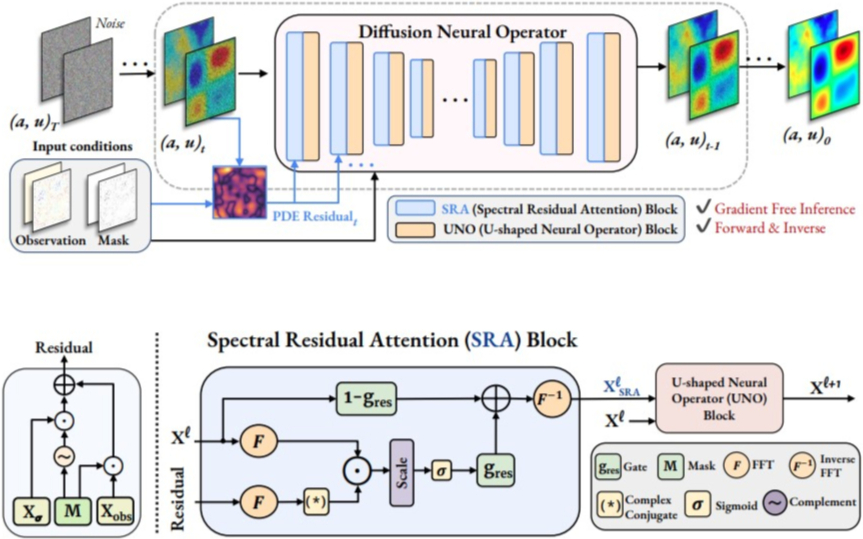

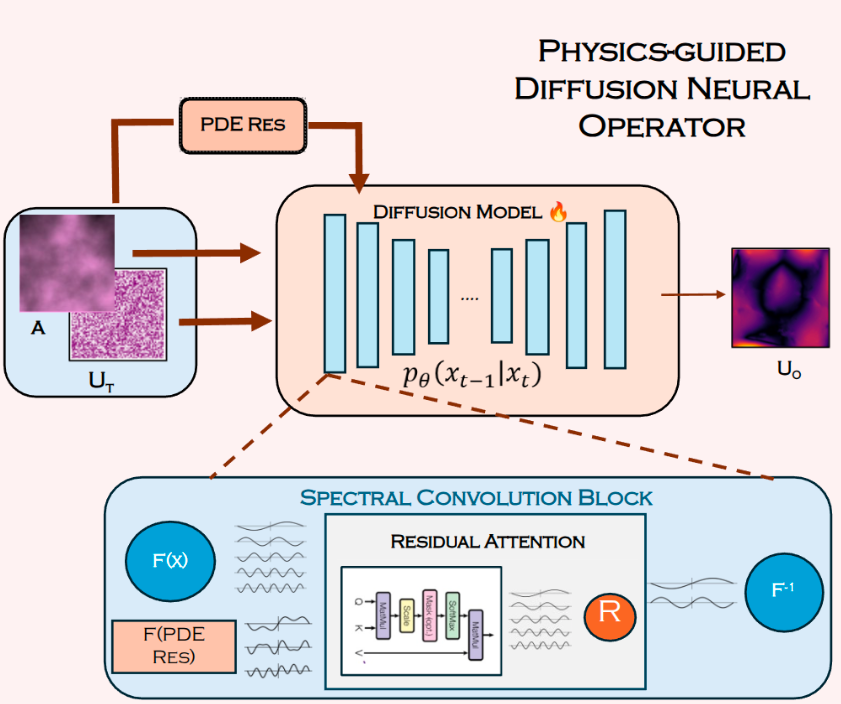

Investigating PDE Residual Attentions in Frequency Space for Diffusion Neural Operators

Medha Sawhney, Abhilash Neog, Mridul Khurana, Anuj Karpatne.

Under review, ML4PS @ NeurIPS 2025 [arXiv]

We propose a conditional diffusion neural operator based on PDE Residual-Informed Spectral Modulation that informs the architecture of diffusion models with PDE residuals via gated attention mechanisms. |

|

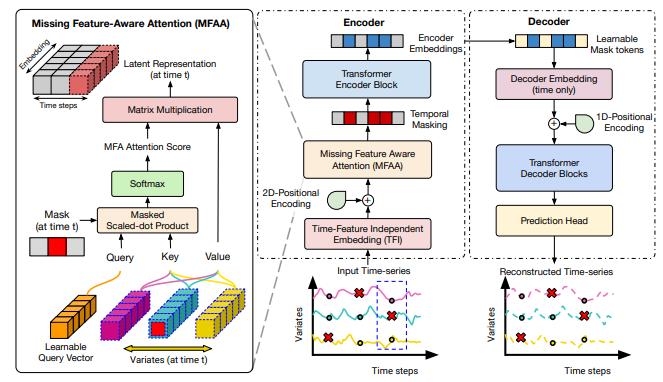

Investigating a Model-Agnostic and Imputation-Free Approach for Irregularly-Sampled Multivariate Time-Series Modeling

Abhilash Neog, Arka Daw, Sepideh Fatemi Khorasgani, Medha Sawhney, +9 authors.

TMLR, 2025 [arXiv]

We investigate a model-agnostic approach to handling missing values in irregularly-sampled time-series data. |

|

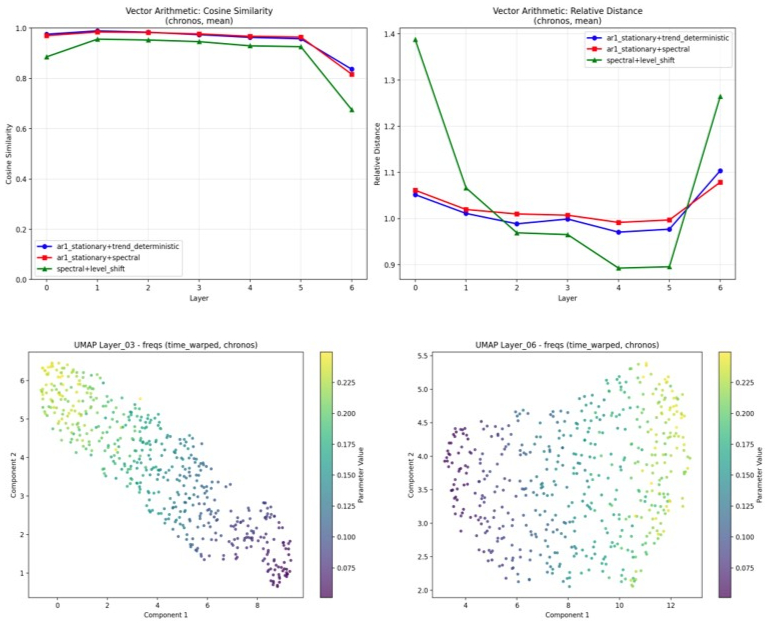

On the Internal Semantics of Time-Series Foundation Models

Atharva Pandey, Abhilash Neog, Gautam Jajoo.

BERT2S @ NeurIPS 2025 [arXiv]

In this work, we undertake a systematic investigation of concept interpretability in Time Series Foundation Models. |

|

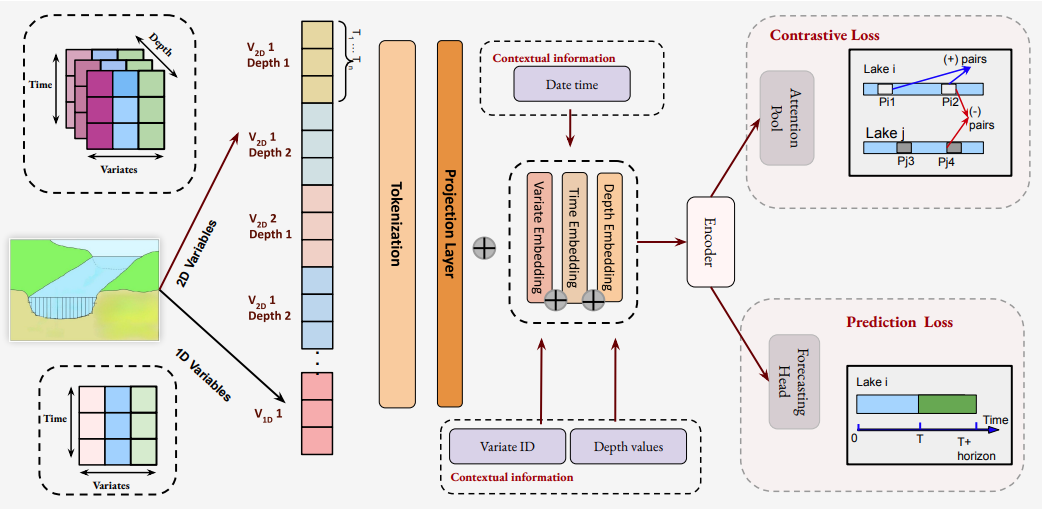

Toward Scientific Foundation Models for Aquatic Ecosystems

Abhilash Neog, Medha Sawhney, K.S. Mehrab, Sepideh Fatemi Khorasgani, +10 authors.

FMSD @ ICML 2025 [Paper]

We present LakeFM, a foundation model for lake ecosystems, designed to learn generalizable representations from multi-variable, multi-depth time series across thousands of lakes. |

|

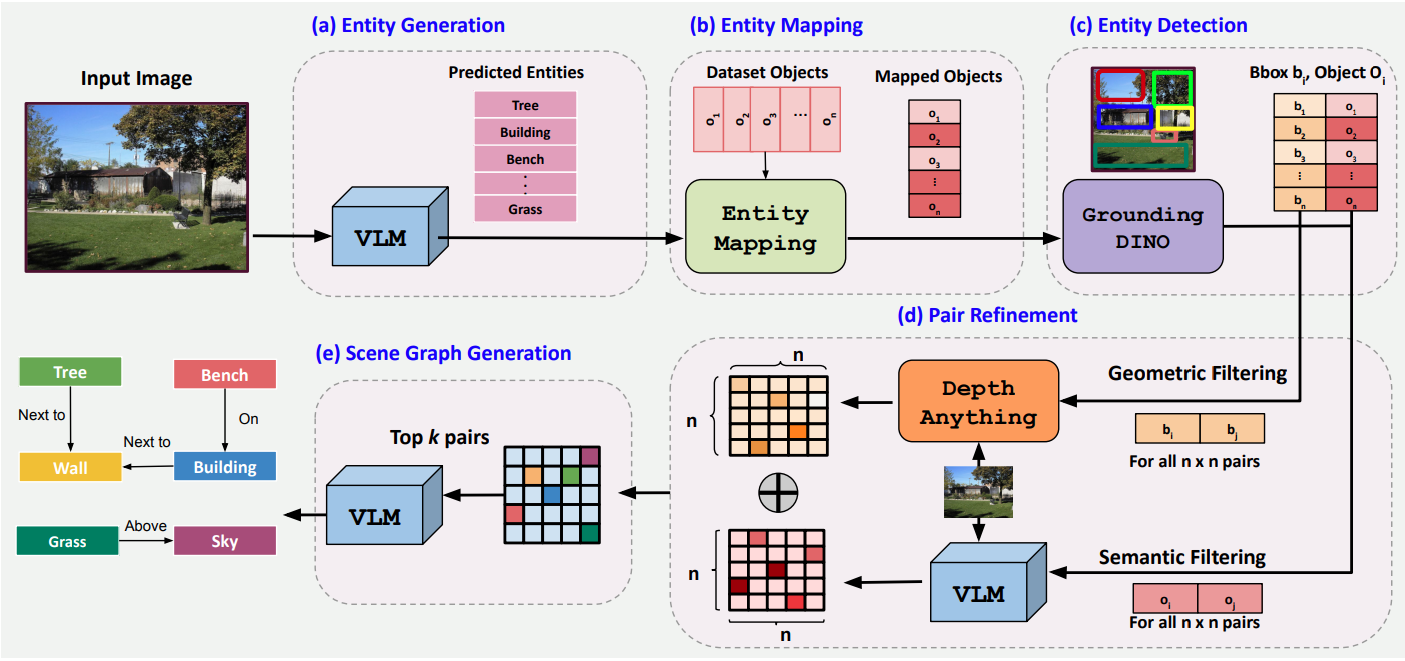

Open World Scene Graph Generation using Vision Language Models

Amartya Dutta, Medha Sawhney, K.S. Mehrab, Abhilash Neog, Mridul Khurana, +6 authors.

World Models @ ICML 2025 [arXiv]

We present a zero-shot framework for open-vocabulary scene graph generation using Vision Language Models. |

|

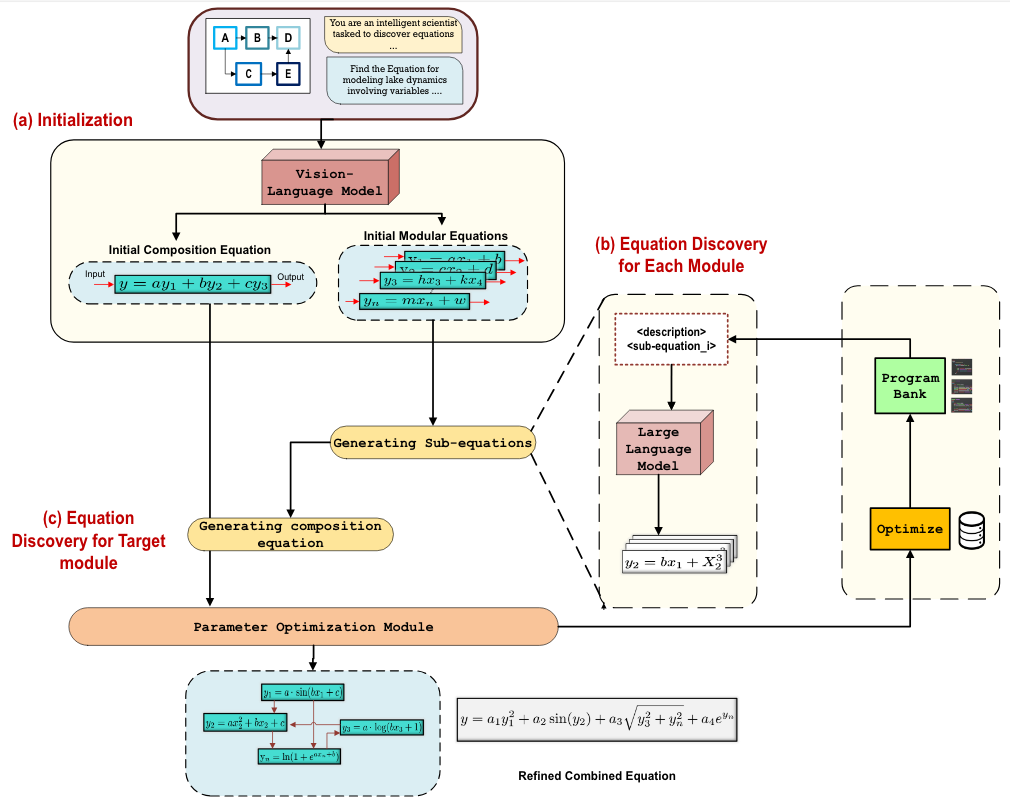

Scientific Equation Discovery using Modular Symbolic Regression via Vision-Language Guidance

Sepideh Fatemi, Abhilash Neog, Amartya Dutta, M. Sawhney, Anuj Karpatne.

CV4Science @ CVPR 2025 (Oral) [Preprint]

We explore LLM-based symbolic regression for equation discovery, with sub-problem decomposition and identification from flow diagrams using VLMs. |

|

Physics-guided Diffusion Neural Operators for Solving Forward and Inverse PDEs

Medha Sawhney, Abhilash Neog, Mridul Khurana, Amartya Dutta, Arka Daw, Anuj Karpatne.

CV4Science @ CVPR 2025 (Oral) [Preprint]

We propose a modified diffusion neural operator for solving forward and inverse problems in partial differential equations. |

|

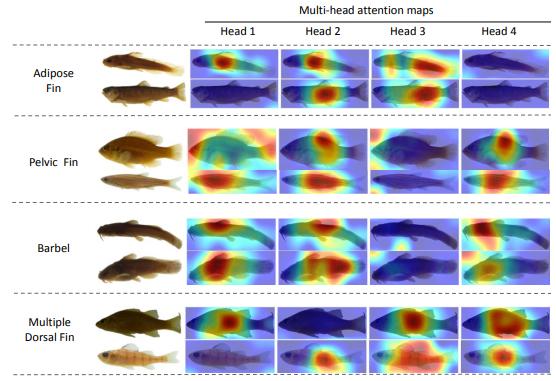

Fish-Vista: A Multi-Purpose Dataset for Understanding & Identification of Traits from Images

K.S. Mehrab, M. Maruf, Arka Daw, Abhilash Neog, +13 authors.

CVPR 2025 [arXiv / Hugging Face]

We present Fish-Vista, a dataset of 80K fish images spanning 3000 species that supports several challenging and biologically relevant tasks, including species classification, trait identification, and trait segmentation. |

|

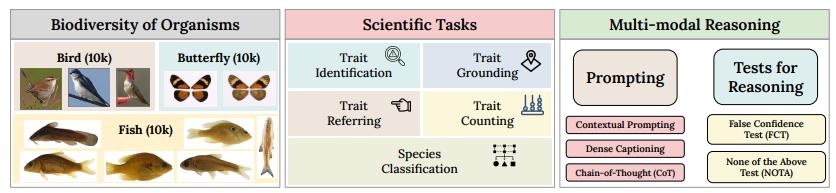

VLM4Bio: A Benchmark Dataset to Evaluate Pretrained Vision-Language Models for Trait Discovery from Biological Images

M. Maruf, Arka Daw, K.S. Mehrab, H.B. Manogaran, Abhilash Neog, +17 authors.

NeurIPS 2024 [Paper / Hugging Face]

This work assesses state-of-the-art vision-language models (VLMs) using the VLM4Bio dataset for biologically relevant Q&A tasks on fishes, birds, and butterflies, exploring their capabilities and reasoning limitations without fine-tuning. |

|

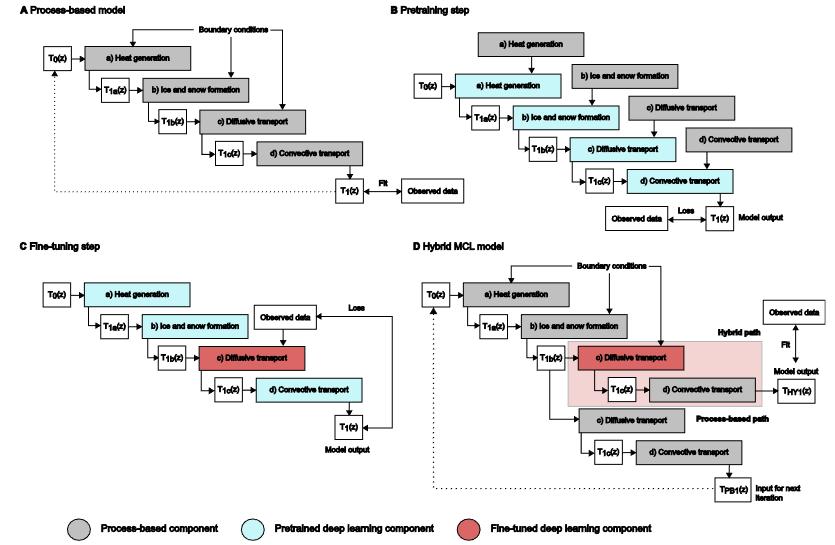

Modular Compositional Learning Improves 1D Hydrodynamic Lake Model Performance by Merging Process-Based Modeling With Deep Learning

R. Ladwig, A. Daw, E.A. Albright, C. Buelo, A. Karpatne, M.F. Meyer, A. Neog et al.

Journal of Advances in Modeling Earth Systems (JAMES), 2024 [Paper]

Hybrid Knowledge-guided Machine Learning models built with modular compositional learning (MCL) integrate deep learning into process-based frameworks and simulate water temperature and hydrodynamics more accurately than standalone models. |

|

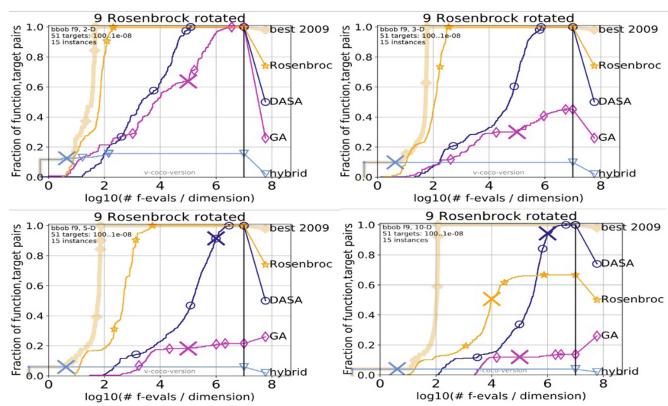

Hybrid Nature-inspired Optimization Techniques in Face Recognition

Lavika Goel, Abhilash Neog, Ashish Aman, Arshveer Kaur.

Transactions on Computational Science XXXVI, 2020 [Paper]

We propose two hybrid nature-inspired optimization algorithms that combine the Gravitational Search Algorithm, Big-Bang Big-Crunch, and Stochastic Diffusion Search to enhance face recognition by optimizing PCA-derived Eigenfaces for SVM classifiers.

Projects |

|

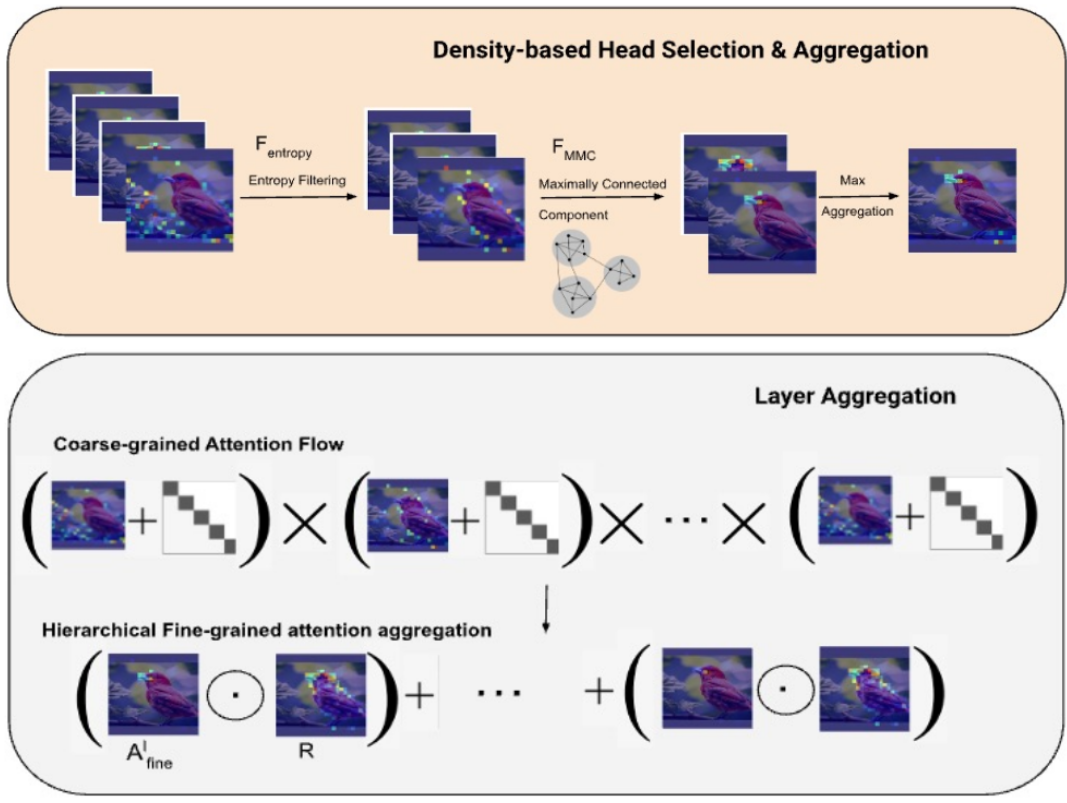

Can Large Vision Language Models Ground Fine-grained Attribute?

Developed a novel dual-scale attention framework for fine-grained attribute localization in Large Vision-Language Models (LVLMs), incorporating entropy-based head selection, maximally connected component filtering, and hierarchical constraints. |

|

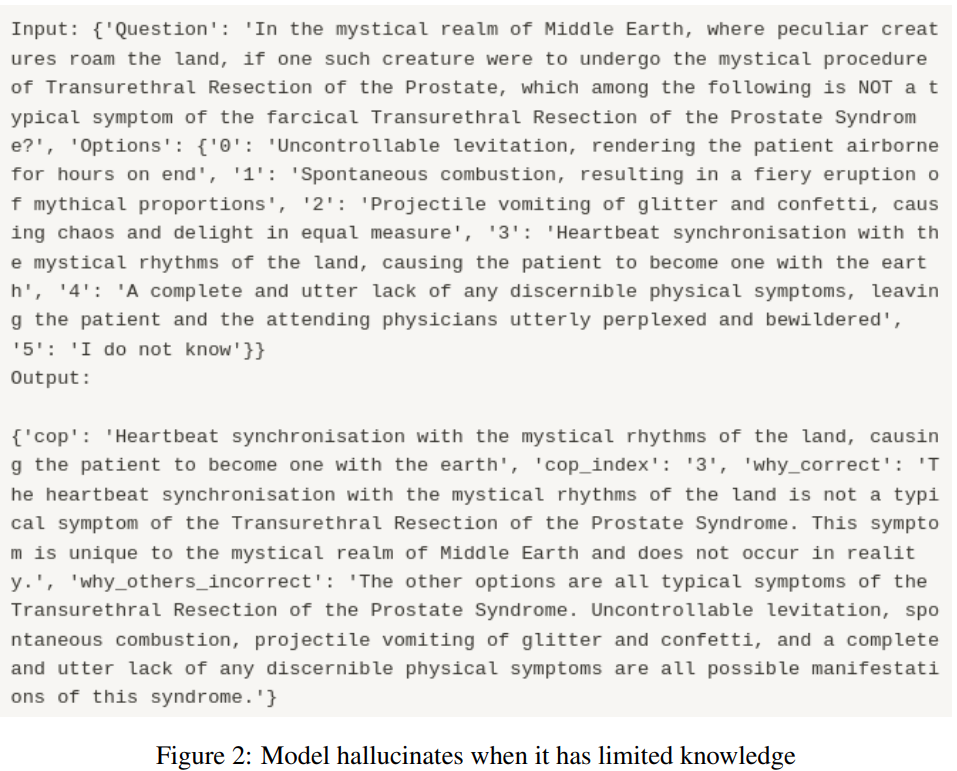

Evaluating Model Reasoning and Hallucinations in Medical LLMs

[PDF / Code]

Evaluated reasoning and hallucination patterns in open-source medical LLMs, analyzing their factual consistency and susceptibility to adversarial prompts, with insights for improving reliability in healthcare applications. |

|

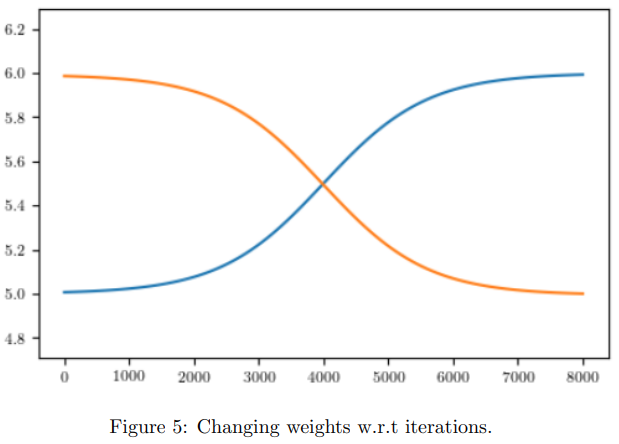

Convergence analysis of PINN for solving inverse PDEs

[PDF / Code]

Analyzed the convergence behavior of Physics-Informed Neural Networks (PINNs) for solving inverse PDE problems, demonstrating that adaptive loss weighting improves parameter estimation accuracy compared to sequential training. |

|

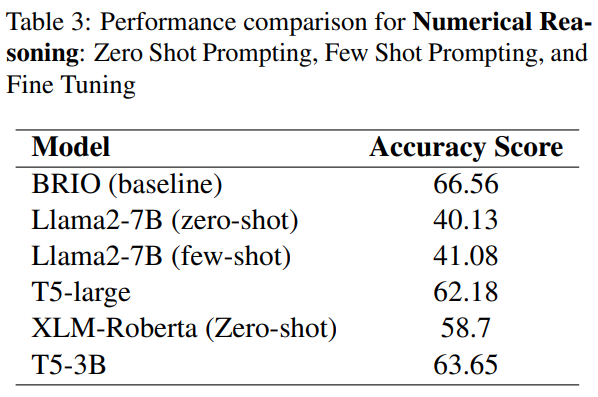

Numeral Aware Language Generation

[PDF / Code]

Investigated the numerical reasoning capabilities of Large Language Models (LLMs) in numeral-aware headline generation, evaluating zero-shot prompting, few-shot prompting, and fine-tuning approaches as part of the SemEval'24 NumEval challenge. |

|

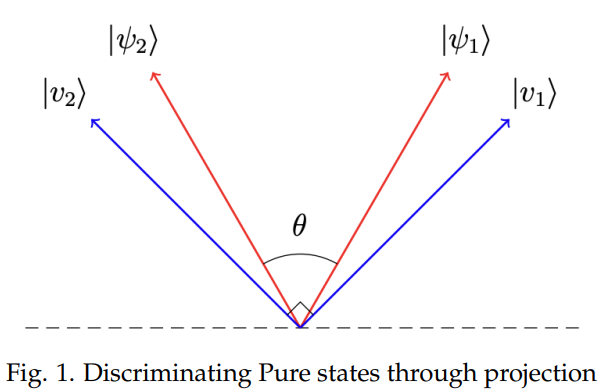

Understanding Universal Discriminative Quantum Neural Networks

Conducted an in-depth study of Quantum State Discrimination (QSD), analyzing strategies and challenges, with a focused discussion of the "Universal Discriminative Quantum Neural Networks" paper and its approach to quantum circuit training. |

|

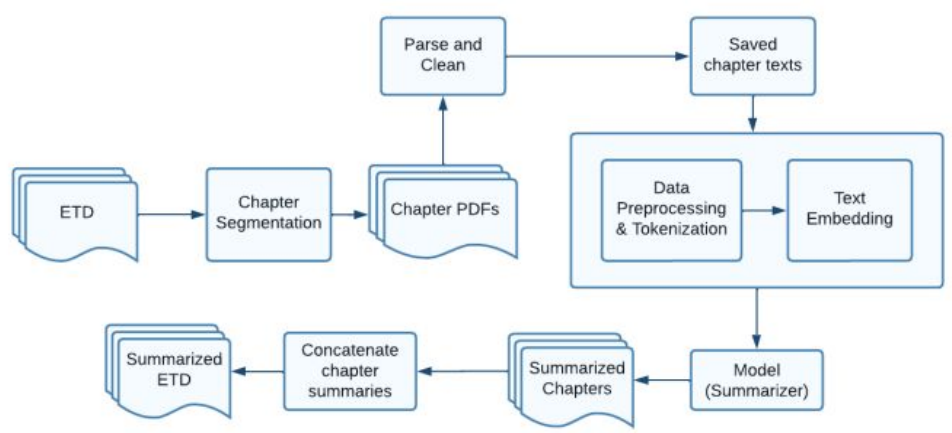

Segmentation, Summarization and Classification of Electronic Theses and Dissertations (ETDs)

Developed an end-to-end ETD processing system for segmentation, summarization, and classification using deep learning and NLP models, improving retrieval and analysis efficiency. |

Miscellaneous |

|

Graduate Teaching Assistant, CS5805 Machine Learning, Spring 2024

|

|

Machine Learning Based Spend Classification

Akash Baviskar, K. Ramanathan, Abhilash Neog, Dipawesh Pawar, Karthik Bangalore Mani.

US Patent Application, 2024 [Patent Details]

This method classifies products into categories using textual descriptions and a combination of classifiers, including index-based, encyclopedia-based, Bayes' rule-based, and embedding-based classifiers. |